Music can now be embedded with subliminal commands to Siri and Alexa

New technology often reveals its benefits long before its downsides become apparent.

A recent Gallup poll showed that 22% of Americans use devices such as Amazon Echo or Google Assistant in their homes. This is a remarkably quick uptake, given that the Echo only launched widely in America in June 2015, with Google Assistant coming the following May.

Not surprisingly, privacy issues have plagued these devices from the start, especially given that the very premise of the invention requires it to listen in on (and presumably record and store) your conversations. After all, how else will it know what you want from it?

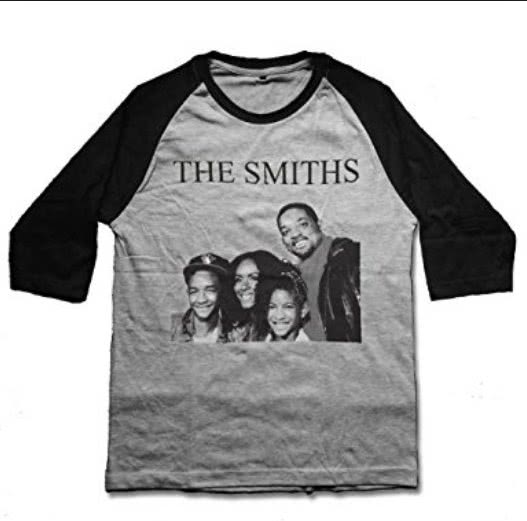

A NY Times report from late March highlights a particularly worrying patent application from Google in which such a device could — quite hypothetically, of course — detect a Will Smith t-shirt “on a floor of the user’s closet”, clock this point of interest, and later show “a movie recommendation that displays, ‘You seem to like Will Smith. His new movie is playing in a theater near you.’” Eek!

It goes without saying that this is creepy. Plus, Will Smith’s films vary wildly in quality.

In 2016, a group of students from the University of California, Berkeley, and Georgetown University highlighted an early concern by hiding Siri commands in white noise, which they used to manipulate a command-based speaker/recorder.

This will confuse the algorithm

This same group of students has since perfected the technique, learning how to embed commands in recordings as messages that are undetectable, at least by humans, and that can do anything from switching your device to airplane mode to adding a specific shopping item to your cart.

The future applications of this are truly frightening. As the past has often shown (see: torrents, .mp3s, piracy-proof CDs, the little tabs on the top of cassettes), any file-filtering system that purports to detect such encoded messages, and so protect users suddenly scared of using streaming services, will quickly be defeated by new technology that bypasses it.

After all, there is far more money in making the machine that detects police radars than there is in making a detector-proof radar.

“We wanted to see if we could make it even more stealthy,” said Nicholas Carlini of the latest subliminal messaging technology. Carlini is a Ph.D. student in computer security at UC Berkeley and one of the authors of the recent study. He believes people are already using this technology for nefarious ends. “My assumption is that the malicious people already employ people to do what I do,” he warned.

Unlike with their 2016 white noise experiments, Carlini and co. don’t even have to hide the message under other audio. “Not only can we make speech recognise as a different phrase, we can also make non-speech recognise as speech (with a slightly larger distortion to the audio)”, Carlini writes on his website.

He explains: “How does this attack work? At a high level, we first construct a special ‘loss function’ based on CTC Loss that takes a desired transcription and an audio file as input, and returns a real number as output; the output is small when the phrase is transcribed as we want it to be, and large otherwise.

“We then minimise this loss function by making slight changes to the input through gradient descent. After running for several minutes, gradient descent will return an audio waveform that has minimised the loss, and will therefore be transcribed as the desired phrase.”
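For the curious, the idea can be sketched in miniature with a few lines of Python. This is not the researchers' code, and it makes some loud assumptions: the three-phrase linear "recogniser", its weights, the four-number "waveform" and every function name below are invented for illustration, and ordinary cross-entropy stands in for the CTC loss Carlini describes. What it does keep is the core move from the quote: define a loss that is small when the input is recognised as the phrase you want, then nudge the input by gradient descent until it is.

```python
import math

# Hypothetical toy "recogniser": a fixed linear model that scores 3 phrases
# from a 4-sample "waveform". Real attacks target a deep speech-to-text model.
WEIGHTS = [
    [0.9, -0.2, 0.1, 0.4],   # phrase 0
    [-0.3, 0.8, 0.5, -0.1],  # phrase 1
    [0.2, 0.1, -0.7, 0.6],   # phrase 2
]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_probs(audio):
    scores = [sum(w * x for w, x in zip(row, audio)) for row in WEIGHTS]
    return softmax(scores)

def transcribe(audio):
    """Return the index of the most likely phrase."""
    probs = predict_probs(audio)
    return max(range(len(probs)), key=probs.__getitem__)

def loss(audio, target):
    """Cross-entropy (a stand-in for CTC loss): small when `target` is likely."""
    return -math.log(predict_probs(audio)[target])

def loss_gradient(audio, target):
    """Gradient of the loss with respect to the input samples."""
    probs = predict_probs(audio)
    grad = [0.0] * len(audio)
    for k, row in enumerate(WEIGHTS):
        coeff = probs[k] - (1.0 if k == target else 0.0)
        for i, w in enumerate(row):
            grad[i] += coeff * w
    return grad

def craft_adversarial(audio, target, step=0.1, max_steps=1000):
    """Nudge the input downhill on the loss until it is heard as `target`."""
    adv = list(audio)
    for _ in range(max_steps):
        if transcribe(adv) == target:
            break
        grad = loss_gradient(adv, target)
        adv = [x - step * g for x, g in zip(adv, grad)]
    return adv

original = [1.0, 0.0, 0.0, 0.5]
adversarial = craft_adversarial(original, target=1)
print("original heard as phrase", transcribe(original))
print("adversarial heard as phrase", transcribe(adversarial))
```

The sketch stops as soon as the toy model changes its mind, which keeps the change to the input modest. The real attack works on actual audio and a far larger model, and on top of this it has to keep the distortion quiet enough that a human listener does not notice it.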

You’re forgiven if you couldn’t exactly follow that. How it is achieved on a technical level is largely irrelevant; what matters is the looming threat it represents.

Even if this technology becomes only as successful a scam as the Nigerian Prince, the idea of it is still rather unsettling: any music file you are listening to could be subliminally instructing your robot assistant, to whom you have granted unlimited access to your files, to complete tasks you may never learn of.

When making Jaws, Spielberg was running terribly over budget and couldn’t afford to replace the unrealistic animatronic shark that had been created, at great expense, for the film. In the end they only ever showed the ominous fin coming out of the water, which was more frightening than anything ’70s robotics could conjure up.

Even if we are convinced these subliminal audio attacks are probably not encoded deep within our music, we can never be truly sure.

After all, it’s the possibility of what lurks beneath that is often the most terrifying.



The article was originally published on The Industry Observer
